Hello everyone!

New user here, so please be nice.

First off, I have to say a big thank you to the creators of this awesome software.

To introduce myself:

I come from the event multimedia and production field. We have certain software, hardware and ways of doing things that, as I understand it, are not necessarily compatible with broadcast.

I do believe, however, that the underlying principles are pretty much the same. We both use Genlock to sync our gear, and we both push massive amounts of pixels per second as consistently as possible.

Long story short, I am trying to play out 4K, or ideally 8K, video via the screen consumer.

After all the reading I have done, I keep finding conflicting information about whether the GPU is used, and what for.

Some say that if VLC can play a file smoothly, so can Caspar; others say that if ffmpeg/ffplay can play it, it will be OK. However, this does not seem to be the case. Let me explain:

I have the latest server and client working together just fine.

Playing Full HD is just fine; after all, that is child's play in 2020. I mean, phones play and record 8K…

With Full HD, the load on the CPU and GPU barely registers.

At 4K and above, things start to change dramatically.

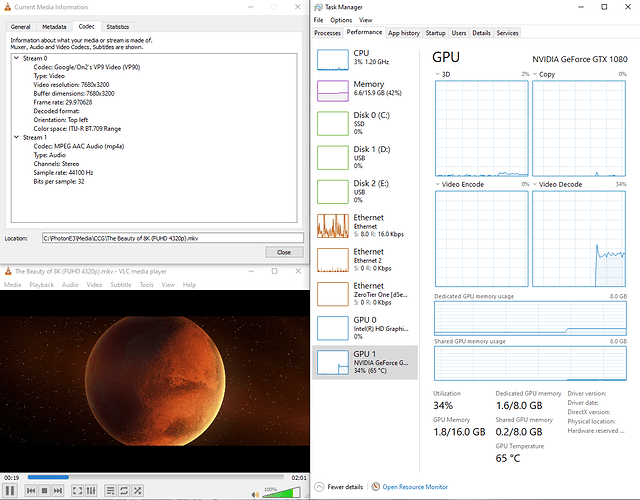

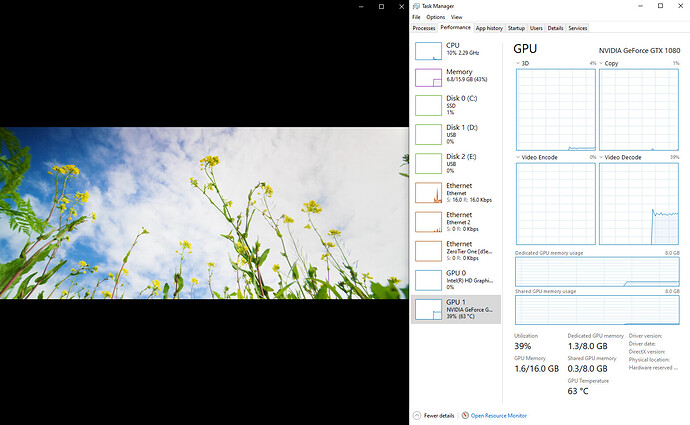

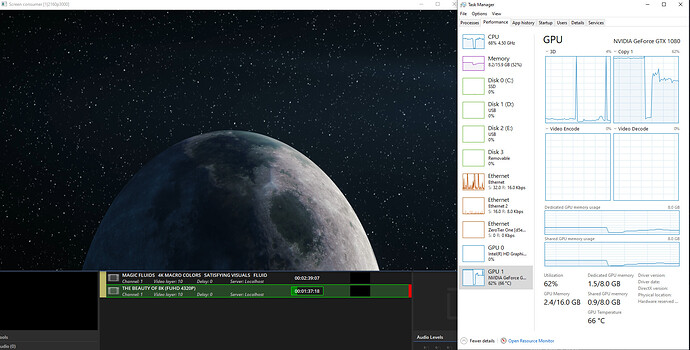

For example, VLC and the Windows Movies & TV app play an 8K file like this:

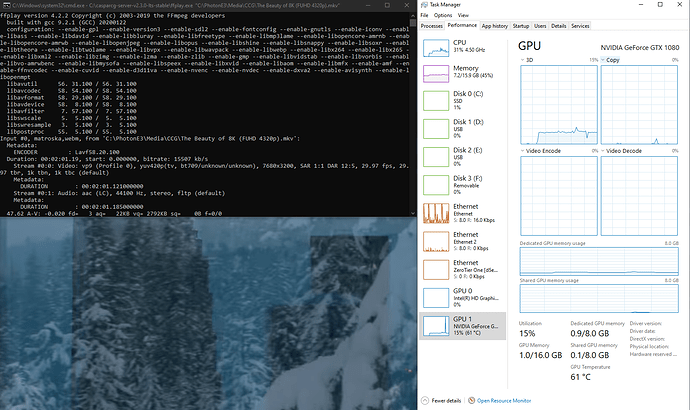

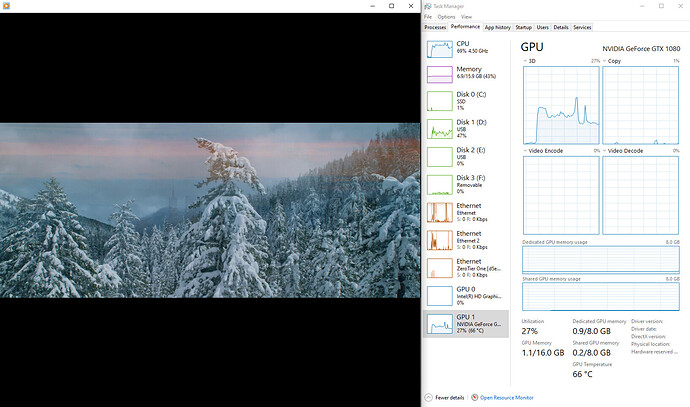

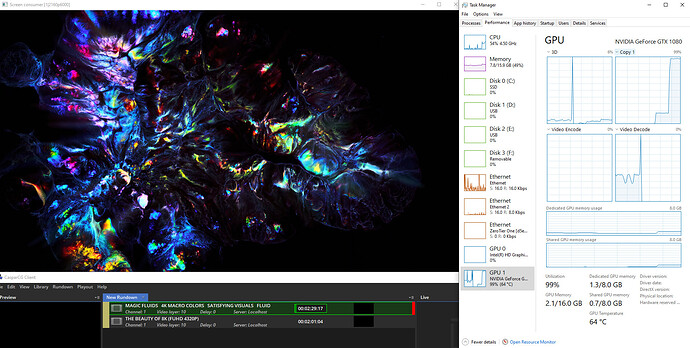

Caspar, however, struggles… badly. Note the Copy operation in the GPU tab: it is not even the main memory engine (Copy) but the secondary one (Copy 1).

Here is what I mean:

It seems that CasparCG, even though it uses FFmpeg under the hood, decodes on the CPU and then copies the frames to the GPU. Furthermore, judging from the load, it seems to do this a number of times.

With a 4K file, the situation is about 90% the same.
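For a rough sense of scale, here is a back-of-the-envelope sketch of how much uncompressed data has to move from system memory to the GPU each second if every decoded frame is uploaded once. It assumes 8-bit BGRA frames (4 bytes per pixel) at 50 fps; those are my own illustrative assumptions, not values taken from Caspar's source, and the `copies` parameter is a hypothetical knob for the case where a frame is copied more than once.

```python
# Back-of-the-envelope upload bandwidth for uncompressed video frames.
# Assumption (illustrative only): 8-bit BGRA = 4 bytes per pixel, 50 fps.
# Not derived from CasparCG's actual internal pixel format or pipeline.

RESOLUTIONS = {
    "Full HD": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
}


def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def upload_rate(width: int, height: int, fps: int = 50, copies: int = 1) -> int:
    """Bytes per second moved to the GPU if each frame is copied `copies` times.

    `copies` is a hypothetical parameter to model repeated uploads.
    """
    return frame_bytes(width, height) * fps * copies


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        mb_per_frame = frame_bytes(w, h) / 1e6
        gb_per_sec = upload_rate(w, h) / 1e9
        print(f"{name:8s} {mb_per_frame:7.1f} MB/frame  {gb_per_sec:5.2f} GB/s")
```

Under these assumptions, a single copy per frame works out to roughly 0.41 GB/s for Full HD, 1.66 GB/s for 4K and 6.64 GB/s for 8K. That means 8K moves 16 times the data of Full HD, and any extra copies multiply that figure again, which would at least be consistent with the load jumping at 4K and above.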

So far I have tested every possible codec and format combination exported from Media Encoder.

I tested on five different kinds of machines, ranging from an AMD Threadripper with an RTX 8000 to a simple i7-7700K with a GTX 1080.

In all tests, Caspar seems to struggle badly with high-resolution content and the screen consumer.

Blackmagic output was no better either: it crashed a lot and was laggy and choppy.

For reference, the machines I tested on are in regular use in configurations such as four 8K outputs, multiple composited layers of 16K video, and multiple SDI inputs, all at the same time.

That is done with software such as Resolume, Ventuz and Dataton Watchout.

Blackmagic devices tested: 8K Pro SDI, Duo 2, Duo2Quad.

Anyone willing to shed some light on this?

I am really trying to understand how Caspar works and what kind of files and hardware it expects.

Best regards!