Hi

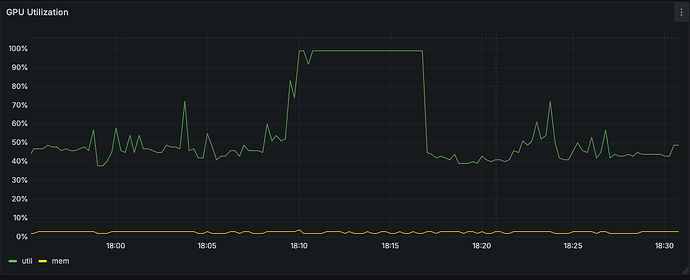

I have an issue where the GPU usage suddenly jumps to 100% and stays stuck there for a while before it comes back down. I think it is connected to playing SRT streams: I can force it to 100% GPU usage by playing many streams at the same time and then making it do something demanding (like playing a 4K video). What makes it odd is that once the usage hits 100%, it stays locked there until I have stopped all the videos, and for a while after that. It looks like it gets into some kind of locked state that it cannot recover from.

See the screenshot from Grafana (it just plots the GPU usage reported by nvidia-smi).
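For anyone trying to catch the same "stuck at 100%" state without Grafana, a minimal Python sketch along these lines could log the same nvidia-smi metric and flag when it stays pinned. It assumes `nvidia-smi` is on the PATH and only watches the first GPU; the function names and the 30-second window are hypothetical choices for illustration, not anything from CasparCG.

```python
import subprocess
import time
from collections import deque


def parse_utilization(csv_text: str) -> list[int]:
    """Parse `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits`.

    The output has one line per GPU, each an integer percentage.
    """
    return [int(line.strip()) for line in csv_text.splitlines() if line.strip()]


def longest_pinned_run(samples: list[int], threshold: int = 100) -> int:
    """Return the length of the longest run of consecutive samples >= threshold."""
    longest = current = 0
    for value in samples:
        current = current + 1 if value >= threshold else 0
        longest = max(longest, current)
    return longest


def sample_gpu0() -> int:
    """Query nvidia-smi once and return the utilization of the first GPU."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=utilization.gpu", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_utilization(out)[0]


def watch(pinned_seconds: int = 30, interval: float = 1.0) -> None:
    """Poll forever and print a timestamp whenever the GPU has been at 100%
    for the whole last `pinned_seconds` samples (hypothetical window size)."""
    history: deque[int] = deque(maxlen=pinned_seconds)
    while True:
        history.append(sample_gpu0())
        if longest_pinned_run(list(history)) >= pinned_seconds:
            print(time.strftime("%H:%M:%S"), f"GPU pinned at 100% for {pinned_seconds}+ s")
        time.sleep(interval)
```

Calling `watch()` while stopping and starting SRT producers would give timestamps that can be matched against the CasparCG log to see which command preceded the lock-up.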

Update: I’m pretty sure this is caused by SRT. I can replicate it with only 4 SRT producers running, by stopping and starting them and trying to fetch SRT feeds that do not exist. I have actually had similar issues with vMix, which were fixed when they updated their version of libsrt.

Has anyone had similar issues? Is there anything I can do to find out where the problem is? Would I get fewer issues if I used srt-live-transmit or ffmpeg to convert the SRT feeds to UDP?

Edit: I’m also getting the issue more often now when I use an SRT consumer.

Please post a bug report on Caspar’s GitHub page.

Okay, I’ll try to create something that others can reproduce. I will probably wait until after 2.5, in case it has already been fixed since 2.4.


You can still describe it for 2.4 and explain how to reproduce it. Any developer who looks at it can reproduce it with the version you used and check the 2.5 and master branch builds to see whether anything is different.

I’ll try to create something that can be reproduced by others.