I’m not surprised 2.07 has problems with 4K - go to builds.casparcg.com

Great, it opened. I thought it was a Windows tool.

I am going to check if I can figure something out there

I mean, I’ve tried 2.07 and 2.1 and the last build there

casparcg-server-3294513072beb861a087429885ea192ff715e73c-windows

and I also downloaded the master source code to build for testing

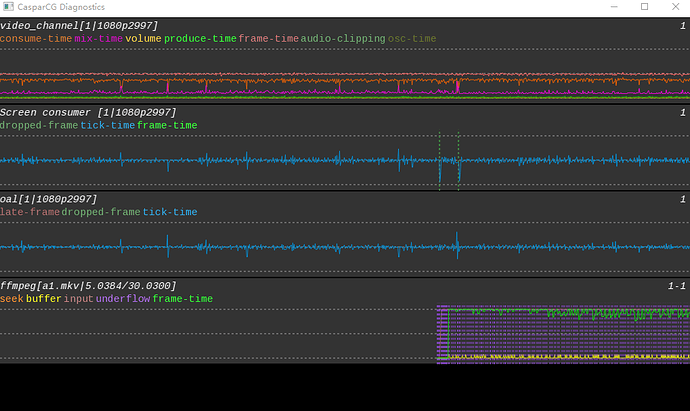

seems the frame-time is the problem?

I looked into the code and saw a lot of “fps * 0.5f” there. I guess it limits each frame’s decode time to half of the frame’s actual duration (to make sure decoding never takes longer than the video’s play time).

I am not sure about this, but I did try changing the 0.5f to 0.99f, and it is still not working
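To put numbers on that guess, here is a minimal Python sketch of the arithmetic. It is not CasparCG code: the function names are made up for illustration, the frame rates are examples (the clip's rate is not given here), and reading the 0.5 factor as "a fraction of the frame duration" is only the interpretation described above.

```python
def frame_duration_ms(fps: float) -> float:
    """Time one frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def decode_budget_ms(fps: float, factor: float = 0.5) -> float:
    """Hypothetical per-frame decode budget: a fraction of the frame duration."""
    return frame_duration_ms(fps) * factor


if __name__ == "__main__":
    for fps in (25.0, 50.0):  # example rates, not taken from the thread
        for factor in (0.5, 0.99):
            budget = decode_budget_ms(fps, factor)
            print(f"{fps:g} fps, factor {factor}: {budget:.1f} ms per frame")
```

If the factor really were a decode-time limit, raising it from 0.5 to 0.99 would roughly double the budget, so seeing no change suggests it may play a different role.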

Underflow is likely disk speed. Caspar does not read and buffer much, but your solution or ffplay probably does.

No, AFAIK Caspar does not use the GPU to render the video. It’s mostly used for the mixer. So I think that after the frame has been decoded it travels to the GPU, gets composited with other layers and is then sent back to the CPU for output.

From your diag window and from what @hreinnbeck said, it looks as if Caspar has problems reading the video fast enough. For 4K playback I would try a striped (RAID 0) set of SSDs, as these files tend to be quite large.

Yes, I have been digging through the source code for days

Compared to the FFmpeg example source, it seems Caspar does not use the GPU to decode video at all

https://github.com/Julusian/ffmpeg-nuget-stable/blob/master/examples/hw_decode.c

I think the CasparCG developers must have some reason to avoid GPU decoding on purpose, since GPU decoding has been around for years and so has CasparCG.

From what I understand now, CCG uses the CPU to decode a frame, sends it to the GPU for the mixer, then sends it back to the CPU for encoding or other output. If a screen output comes after this, the frame is sent back to the GPU again (I see high GPU copy usage, so I guess that is what I am describing?)
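A rough Python sketch of how much data those copies could involve. Everything here is an assumption for illustration: 3840x2160 frames, 4 bytes per pixel (8-bit BGRA), an example frame rate, and one full frame moved per copy. The function names are hypothetical.

```python
def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def copy_rate_mb_per_s(width: int, height: int, fps: float,
                       copies: int = 1, bytes_per_pixel: int = 4) -> float:
    """Data moved per second (in MB) if every frame is copied `copies` times."""
    return frame_bytes(width, height, bytes_per_pixel) * fps * copies / 1e6


if __name__ == "__main__":
    # CPU -> GPU, GPU -> CPU, CPU -> GPU (screen) would be 3 copies.
    for copies in (1, 2, 3):
        rate = copy_rate_mb_per_s(3840, 2160, fps=50.0, copies=copies)
        print(f"{copies} copy/copies per frame: {rate:.0f} MB/s")
```

Under these assumptions a single copy direction is already well over 1 GB/s, which would fit with seeing noticeable GPU copy activity.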

It just can’t be a disk speed issue. I do have a PCIe SSD RAID 0, and if disk speed were the issue I could test the video file on a memory disk. But this file is only 120 Mbps, so it might be a CPU usage limitation in decoding
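As a quick sanity check on the bitrate argument, a small Python sketch. The 120 Mbps figure is from this post; the disk speeds are placeholder examples, not measurements, and the function names are made up.

```python
def mbit_to_mbyte_per_s(mbit_per_s: float) -> float:
    """Convert a bitrate in Mbit/s to MB/s (8 bits per byte)."""
    return mbit_per_s / 8.0


def disk_headroom(video_mbit_per_s: float, disk_mbyte_per_s: float) -> float:
    """How many times faster the disk is than the video's average read rate."""
    return disk_mbyte_per_s / mbit_to_mbyte_per_s(video_mbit_per_s)


if __name__ == "__main__":
    need = mbit_to_mbyte_per_s(120.0)
    print(f"120 Mbit/s needs about {need:.0f} MB/s on average")
    for disk in (150.0, 500.0):  # example sustained read speeds in MB/s
        print(f"disk at {disk:.0f} MB/s: {disk_headroom(120.0, disk):.1f}x headroom")
```

This only covers the average rate; bursts around large keyframes can be higher, so it is a lower bound on what the disk has to deliver.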

Anyway, I will keep trying to get this 4K video working. I would much appreciate it if anyone has ideas for this

But from your screenshot there is obviously something causing the underflows in the lowermost graph. If it’s not the disk, then it’s something else.

the green line (frame-time) is always at the top

I think there could be 2 reasons for that

1 disk speed is too low to read the file for decoding

2 decoding time is too long for one frame

based on the hardware that I am testing on, and since CCG doesn’t use hardware acceleration

I think 2 is the problem
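One way to rule reason 1 in or out independently of CasparCG is to time a plain sequential read of the file. A minimal Python sketch follows; the function names and the 2x safety margin are assumptions, and the operating system's file cache can make repeat runs look faster than the disk really is.

```python
import time


def measure_read_mbit_per_s(path: str, chunk_size: int = 4 * 1024 * 1024) -> float:
    """Read the whole file sequentially and return the throughput in Mbit/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # empty bytes object means end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    # Guard against a zero interval on very small files.
    return (total * 8 / 1e6) / max(elapsed, 1e-9)


def disk_is_fast_enough(path: str, video_mbit_per_s: float, margin: float = 2.0) -> bool:
    """True if the measured read rate exceeds the video bitrate by `margin` times."""
    return measure_read_mbit_per_s(path) >= video_mbit_per_s * margin
```

Calling `disk_is_fast_enough("clip.mp4", 120.0)` on the actual file (the file name here is a placeholder) would show whether the disk alone can explain the underflow.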

I feel CCG limits CPU usage for decoding somehow. I am not sure, still looking at the code

and I will try to use GPU decoding in CCG later if I can understand the C++ example code

so if there is an important reason for the CCG developers to avoid GPU decoding, I am really interested to know that reason, although I don’t think I can get on top of the C++ code

at least I will try to get one 4K video to play well

I think the reason is quite simple: in most of the situations where CCG is used, the codecs are (and most certainly were) not supported by GPU-accelerated decoders. These formats have either 4:4:4:4, 4:2:2:4 or 4:2:2 chroma subsampling and are very rarely plain old H.264. As you can see here: https://developer.nvidia.com/video-encode-decode-gpu-support-matrix almost no GPUs support decoding streams with anything other than 4:2:0 subsampling. The added development cost was not offset by much gain, since for both CCG developers the benefit of lowering CPU load for 4:2:0 encoded streams wasn’t very useful in their use cases.

hi, jstarzak

I don’t think that makes sense. I know most GPUs can’t handle 444 decoding, but most CPUs are not able to do this job either (that’s why people want GPU support for it)

So if what you said is the reason, then the actual reason is that most people don’t need to decode 420 video; they just use raw 444/420 input (HDMI, SDI, etc.)

Still, the problem now is that CCG on my PC is not able to play a single 120 Mbps 4K H.264 video smoothly. This is not a high demand at all, and CPU usage is around 10%. I am trying to figure out how to get this to work, even if it is CPU based

If CPU usage is that low, why are you still not checking for other problems? If the CPU were at 100% it would make sense to complain about “not using the GPU”. With that low a CPU load it can only be something else. So the discussion should finally change direction.

You gave your thought about a slow disk. I tried a memory disk and a RAID 0 SSD, same result. I don’t see any other suggestions.

I’ve given my thought: I think there is some kind of CPU limitation inside CCG

let’s say 10% per video decode, and this 4K video file needs 15% CPU usage for decoding, so the problem happens.

I am not sure, still working on the code.

I still wish to use GPU decoding, but I would be OK if I could get a more powerful CPU to handle videos

So the problem that I can’t get past is that playback is still laggy at 10% CPU usage on this single 4K video, which means a more powerful CPU might not resolve it. Trying to figure this out.
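One thing that would be consistent with lag at around 10% CPU is decoding that is bound to one or a few threads: the overall percentage averages over all logical cores, so a fully busy core can look like a small number. A tiny Python sketch, with example core counts (the CPU in question is not stated in this thread) and a hypothetical function name:

```python
def overall_cpu_percent(busy_threads: int, logical_cores: int) -> float:
    """Overall CPU % reported when `busy_threads` cores are fully busy."""
    return 100.0 * min(busy_threads, logical_cores) / logical_cores


if __name__ == "__main__":
    for cores in (8, 12, 16):  # example machines, not the poster's
        pct = overall_cpu_percent(1, cores)
        print(f"1 saturated thread on {cores} logical cores: {pct:.2f}% overall")
```

Looking at per-core usage rather than the overall figure would show whether a single core is pinned while playback stutters.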

I just found the solution to the laggy playback problem on GitHub

There is a thread limit in the code for some reason. Remove it and the 4K video plays smoothly, but as the developer said, it may also cause some more latency. Posting this in case someone meets the same problem and wants a solution
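For anyone wondering why a thread limit trades smoothness against latency, here is an idealized Python model. It is not CasparCG or FFmpeg code: the 60 ms decode time and 25 fps are made-up example numbers, perfect scaling across threads is assumed, and the latency estimate assumes frame-level threading where each extra thread keeps roughly one more frame in flight.

```python
def max_decode_fps(threads: int, decode_ms_per_frame: float) -> float:
    """Upper bound on decoded frames per second with `threads` parallel decoders."""
    return threads * 1000.0 / decode_ms_per_frame


def keeps_up(threads: int, decode_ms_per_frame: float, fps: float) -> bool:
    """True if decoding can at least match the playback frame rate."""
    return max_decode_fps(threads, decode_ms_per_frame) >= fps


def added_latency_ms(threads: int, fps: float) -> float:
    """Rough extra delay if each additional thread holds one more frame."""
    return (threads - 1) * 1000.0 / fps


if __name__ == "__main__":
    decode_ms, fps = 60.0, 25.0  # hypothetical numbers for illustration
    for threads in (1, 2, 4):
        print(f"{threads} thread(s): up to {max_decode_fps(threads, decode_ms):.1f} fps, "
              f"keeps up: {keeps_up(threads, decode_ms, fps)}, "
              f"extra latency about {added_latency_ms(threads, fps):.0f} ms")
```

In this model a single thread cannot reach the playback rate while two can, at the cost of about one extra frame of delay, which matches the trade-off the developer described.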